We recently came across a requirement that could be solved by doing 1 of 2 things.

- Create a huge case statement

- Use a dynamic where clause

The case statement idea seemed the easiest, but would have been around 100 cases long, and if any changes needed to be made, it would require changing the ETL and re-releasing the code.

Instead we decided to store the where clauses in a spreadsheet, and then use that to drive output rather than the case statement. We could have gone with a table, and then built a form on top of it, but a spreadsheet was the simplest and quickest solution.

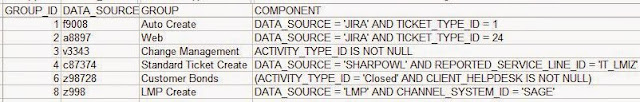

Here is an example of what we were dealing with:

Our group dimension was defined by the component column, and went on for an additional 94 groups. Because each value definition in this dimension was not based on the same set of columns we couldn't use the standard lookup_ext function, or a join.

We did consider using a case statement, but then that would have been huge, and it was anticipated that changes would be made to these definitions and we didn't want to have to re-release the ETL every time a change was made.

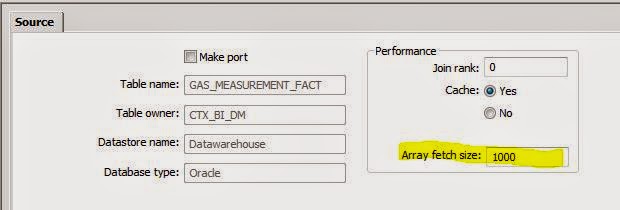

Getting dynamic sql into your Data Services query is done by using the pushdown_sql function, and feeding in whatever sql you want it to use.

The challenge for us was to make sure that we went through each of the rows in our table, and applied the where clauses one by one.

The first thing we did was copy all the dimension data into a working table, and added an extra column called processed and defaulted all the values to N. We did this so that we'd have a list to step through and mark off each time we'd processed a record.

When then setup a loop to go through each of our rows and process them.

The script Processed_Count initialised the counter variable for our loop.

$G_ProcessedCount = sql('Datawarehouse','select count(*) from WK_DIM_GROUP');

The inside of our loop looks like this:

($G_ProcessedCount >= 1) is there as our loop control. Once that variable is 0 we have processed all the dynamic where clauses in our list.

The Set_Variables script is where we setup the dynamic where clause, and the value that will be assigned to the rows that filter through the where clause.

$G_GroupID = sql('Datawarehouse','select min(GROUP_ID) as GROUP_ID from WK_GROUP where PROCESSED = \'N\'');

$G_GroupLogic = sql('Datawarehouse','select a.component from WK_GROUP a where a.GROUP_ID = [$G_GroupID]');

$G_GroupID will hold the key that we want to assign to the rows. We select the min because we want the first row that has not been processed yet. As we step through the rows and update the processed rows to Y, we will end up moving to the subsequent N rows until all of them have been processed and updated to Y.

$G_GroupLogic will hold the where clause you need to filter down the rows.

Now all we need to do is apply that in a query.

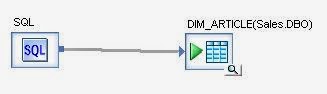

In the above picture that is done in the data flow called DF_WK_GROUP_ID_Load.

pushdown_sql('Datawarehouse', '{$G_GroupLogic}' ) becomes the where clause for our query.

$G_GroupID is mapped to the column that holds the dimension table foreign key.

Now you just need to update the row you have processed in the working table from N to Y. We do that in the DF_WK_GROUP_Update data flow.

Finally, update the $G_ProcessedCount variable in the Update_CountVariable script:

$G_ProcessedCount = $G_ProcessedCount -1;

We'll keep on going through this process until there are no more rows to process.