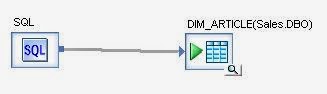

If you are loading a dimension, and you are using history preservation, add a line in the filter field to only bring through current records.

This helps you with two things, one obvious, one not so obvious.

The first thing thing it helps you with is a little bit of a performance boost. If you think about it, you only need to compare against the current record of a given dimension value. Setting the generated key column makes sure the comparison only takes place against the most recent row, but the table comparison transform still has to go through all the records to get there. If you filter only for the current records, then you can compare against a smaller record set and get through it just a little bit quicker.

Imagine you have a roughly ten rows of history per dimension, this can result in a record set one tenth of what you had previously. It may not get you that much of a performance gain, but when you are trying to get your jobs down to near real time, then every second counts.

The second thing this helped with is dealing with expired historical data that becomes current again. Let me give you and example.

This company had an asset team that existed between 1-JAN-2013 and 1-JUL-2013. So I ended up with a record in the asset team table that looked something like:

Team A became active again on 1-JAN-2014.

The problem I had was that before I put the filter in the table comparison to only bring through current records, the table comparison transform was ignoring Team A. It was doing this because it would look in the target table for a record with a natural key of A. It found the old record there, and because the effective dates are set by the history preserving transform, it was ignoring it because everything else was the same. I needed it to process this record as an insert so that the history preservation transform would process it correctly with the new effective date and CURR_IND equal to 'Y'.

By putting the filter in, I was filtering out the old Team A record from my comparison data set. That way the table comparison saw this as a new record and put it through as an insert. This is a fairly unique case where you have historical records for a dimension value but none of them are current.

I hadn't actually encountered this scenario while working in previous versions of Data Services, but if I had I think the best workaround would have been to create a view where you filter for current records, and then use that as the comparison data set.