Join ranking

You can define the order in which Data Services will join tables. Tables with higher join ranks are joined before tables with lower join ranks. Typically you want your largest table to have a higher join rank than your smaller tables.

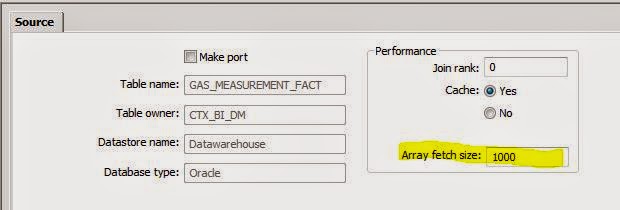

You set the join rank in the source table.

This typically only has an effect if the join is being done on the Data Services job server; in other words, when Pushdown SQL is not happening. For a full and detailed explanation of how this works, consult the Performance Optimization Guide for DS.
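The ordering rule above can be pictured with a small Python sketch. This is only an illustration of "higher rank first", not how the DS optimizer is implemented; the table names, rank values, and row counts are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class SourceTable:
    name: str        # hypothetical table name
    join_rank: int   # the value you would set on the source table in DS
    row_count: int   # illustrative size only


def join_order(sources):
    """Return the sources ordered from highest join rank to lowest."""
    return sorted(sources, key=lambda t: t.join_rank, reverse=True)


# Assumed example: the largest table gets the highest join rank.
sources = [
    SourceTable("CUSTOMER_DIM", join_rank=10, row_count=50_000),
    SourceTable("SALES_FACT", join_rank=100, row_count=80_000_000),
    SourceTable("REGION_LOOKUP", join_rank=5, row_count=200),
]

for table in join_order(sources):
    print(f"{table.name}: rank {table.join_rank}, {table.row_count} rows")
```

In this made-up setup the fact table comes first because it carries the highest rank, followed by the two smaller tables.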

Array fetch size

If you have powerful hardware in place, try raising this value and see what effect it has. Interestingly, even the DS Performance Optimization Guide simply recommends adjusting the value up and down and going with whichever setting gives you the best performance.

Array fetch size is also set on the source table.
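If it helps to picture what the setting changes: array fetch size is the number of rows brought back per fetch request, so a larger value means fewer requests for the same data. Here is a rough Python sketch using the standard sqlite3 module and `fetchmany` as a stand-in. It is not DS code, the `demo_source` table is hypothetical, and because SQLite runs in-process it only shows the number of fetch calls, not real network timings.

```python
import sqlite3


def count_fetch_calls(conn, array_fetch_size):
    """Read every row of demo_source; return (rows_read, fetch_calls)."""
    cur = conn.cursor()
    cur.execute("SELECT id FROM demo_source")
    rows_read = 0
    fetch_calls = 0
    while True:
        batch = cur.fetchmany(array_fetch_size)
        if not batch:
            break  # no more rows to fetch
        fetch_calls += 1
        rows_read += len(batch)
    return rows_read, fetch_calls


# Build a small in-memory source table (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE demo_source (id INTEGER)")
conn.executemany(
    "INSERT INTO demo_source VALUES (?)", [(i,) for i in range(10_000)]
)
conn.commit()

for size in (100, 1000, 5000):
    rows, calls = count_fetch_calls(conn, size)
    print(f"array fetch size {size}: {rows} rows in {calls} fetch calls")
```

Against a real remote database each fetch call costs a round trip, which is why experimenting with the value can pay off, within the memory you have available.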

Rows per commit

SAP recommends that you set this to between 500 and 2,000. Again, I recommend changing the value up and down to see which setting works best.

Two things to consider:

- If you are loading a Long datatype, then the commit size will be 1 (this does not apply to Oracle)

- If you are using an overflow file, and an error occurs resulting in a rejected row, then the commit size will also revert to 1. I'll be doing a post soon on the pros and cons of using the overflow file vs using the validation transform.

Committing one row at a time will be slower than committing 1,000. So consider whether you can load the Long differently (perhaps in a large Varchar field) and decide whether you really need an overflow file.
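To put some numbers on that, here is a small Python sketch of the two rules above and what they do to the number of commits. The function and parameter names are my own invention for illustration; this is not a DS API, just the arithmetic.

```python
import math


def effective_rows_per_commit(configured, has_long_column=False,
                              target_is_oracle=False,
                              overflow_row_rejected=False):
    """Apply the two rules described above to the configured commit size."""
    # Rule 1: loading a Long datatype forces a commit size of 1 (not on Oracle).
    if has_long_column and not target_is_oracle:
        return 1
    # Rule 2: a rejected row with an overflow file in use drops the size to 1.
    if overflow_row_rejected:
        return 1
    return configured


def commits_needed(total_rows, rows_per_commit):
    """Number of commits required to load total_rows."""
    return math.ceil(total_rows / rows_per_commit)


total_rows = 1_000_000  # assumed load size for the example
for label, size in [
    ("plain load", effective_rows_per_commit(1000)),
    ("Long column", effective_rows_per_commit(1000, has_long_column=True)),
    ("Long column on Oracle", effective_rows_per_commit(
        1000, has_long_column=True, target_is_oracle=True)),
]:
    print(f"{label}: {commits_needed(total_rows, size)} commits")
```

For the assumed one-million-row load, that is the difference between a thousand commits and a million of them.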

Chat to your DBA

Seriously, some of them are actually nice people :-) Now that you've fully tuned what you can on DS, the DBA can look at making improvements in the source and target databases.

Database performance tuning can be different depending on which database you are using, and being an expert in performance tuning on all the databases that DS can connect to would be quite a feat!

So, if you have a Data Flow that is still running too slowly, then just go to Validation > Display Optimized SQL, and send the SQL over to your DBA to see if she can work some of that DBA magic.

Anything else?

If you've found something that has significantly improved the performance of one of your Data Services jobs that I did not mention in this series, then please let everyone know in the comments.
